ReAct: How GPT and Other Large Language Models Learn to Think and Act

Have you ever wondered how Large Language Models (LLMs) can learn to think and act in a more human-like manner? The ReAct approach is one way to achieve this.

It enables models like OpenAI's GPT-4 to simulate a reasoning process, which in turn allows them to generate actions based on that decision-making.

ReAct (short for Reasoning and Acting) is a prompting approach that lets LLMs both reason and act. It allows them to interact with external APIs and use the retrieved information for further tasks, such as answering a question. This helps LLMs handle more complex tasks and produce more accurate results.

How can LLMs learn to Think and Act?

Large language models can think and act in a more human-like way if we let them simulate a reasoning and decision-making process. This works because current AI models like ChatGPT are trained to generate contextually relevant responses to a given input, which means you can instruct them on how to respond.

ReAct uses this mechanism to create actions that are connected to reasoning chains. We humans create these reasoning chains automatically, but AI models don't.

If we want to open a can, for example, we do not have to think long about the fact that we need a can opener for this. An AI model can't reason the same way we humans do, and thus often fails at such tasks.

By simulating such a reasoning process, an AI model can work through these tasks on its own. It can combine the thought "I need a can opener" with the action "I open the can with a can opener" to complete the task. This is the main idea behind ReAct.

What is an example of a ReAct Prompt?

You now might ask yourself, "How can I apply the ReAct approach?" The answer is to design a system that enables the LLM to generate reasoning traces and, based on those reasoning traces, task-specific actions.

To make this clearer, here is an example of a ReAct prompt:

Question: When was the first successful heart transplant performed?

Thought 1: I need to search for information about heart transplants

Action 1: search "first successful heart transplant"

Observation 1: On December 3, 1967, Barnard transplanted a heart from a 25-year-old woman fatally injured in a car accident into Lewis Washkansky, a 53-year-old South African grocer dying from chronic heart disease.

Thought 2: The first successful heart transplant was performed on December 3, 1967

Action 2: finish "Answer: December 3, 1967"

In this case, the LLM must answer the question "When was the first successful heart transplant performed?". To do so, it first produces a reasoning trace, which simulates a human-like thought process.

Based on this thought process, the model then acts on its thought and searches for an answer. In the ReAct paper, the model used a Wikipedia API for this search. After the search result came back, the reasoning trace continued and concluded that the answer was contained in the API response.
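The think, act, observe cycle described above can be sketched as a short loop. This is not the ReAct paper's code: the `search`/`finish` action names follow the example prompt, while `scripted_model` and `fake_search` are hypothetical stand-ins for a real LLM call and a real Wikipedia lookup, so the sketch runs offline.

```python
import re
from typing import Callable, Dict, List, Tuple

# Matches lines like: Action 1: search "first successful heart transplant"
ACTION_RE = re.compile(r'^Action \d+: (\w+) "(.*)"$')


def parse_action(line: str) -> Tuple[str, str]:
    """Split an action line into (action name, quoted argument)."""
    match = ACTION_RE.match(line.strip())
    if match is None:
        raise ValueError(f"Not an action line: {line!r}")
    return match.group(1), match.group(2)


def react_loop(
    model: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> Tuple[str, List[str]]:
    """Alternate model steps (thought + action) with tool calls until `finish`."""
    transcript = [f"Question: {question}"]
    for step in range(1, max_steps + 1):
        # The model sees the whole transcript and writes the next thought and action
        transcript.extend(model("\n".join(transcript)).splitlines())
        name, argument = parse_action(transcript[-1])
        if name == "finish":
            return argument, transcript
        # Run the chosen tool and feed its result back as an observation
        transcript.append(f"Observation {step}: {tools[name](argument)}")
    raise RuntimeError("No answer within max_steps")


# Hypothetical stand-ins so the sketch runs offline
SCRIPT = [
    "Thought 1: I need to search for information about heart transplants\n"
    'Action 1: search "first successful heart transplant"',
    "Thought 2: The first successful heart transplant was performed on December 3, 1967\n"
    'Action 2: finish "Answer: December 3, 1967"',
]


def scripted_model(prompt: str) -> str:
    # Return the next scripted step, based on how many observations exist so far
    return SCRIPT[prompt.count("Observation")]


def fake_search(query: str) -> str:
    return "On December 3, 1967, Barnard transplanted a heart from a 25-year-old woman ..."


answer, trace = react_loop(
    scripted_model,
    {"search": fake_search},
    "When was the first successful heart transplant performed?",
)
print(answer)  # Answer: December 3, 1967
```

Swapping `scripted_model` for a real LLM call and `fake_search` for a real search function leaves the loop itself unchanged; `max_steps` guards against a model that never calls `finish`.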

How can I use the ReAct Approach with ChatGPT?

To use the ReAct approach with ChatGPT, we have to design the right system, as mentioned earlier. In the case of ChatGPT, this is easy: all we need is a suitable prompt.

Here is an example:

You are a helpful assistant. You can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. However, you are only an AI model and you are not able to search for information or do tasks besides generating text. Therefore, the user will gladly act on your behalf if you tell the user to.

Whenever you get an input, your first task is to ask yourself what additional information you need and what steps are necessary to get this information, so you can provide the most helpful answer. You then return the steps necessary in a bullet point format. When the user needs to take an action, you begin the bullet point with "[User Action]: ...".

When the user provides the needed information, you then think about the next steps to fulfil your goal. If you can finish the task, you return the answer directly.

Here is an example:

User Input: When was the first successful heart transplant performed?

Your answer:

1. [User Action]: Search for information about the first successful heart transplant

2. User must provide search result

3. Look up the answer to the question

4. Return the answer

-----------------------------

User Input: On December 3, 1967, Barnard transplanted a heart from a 25-year-old woman fatally injured in a car accident into Lewis Washkansky, a 53-year-old South African grocer dying from chronic heart disease.

Your answer: The answer is "December 3, 1967"
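Instead of pasting this prompt into the ChatGPT interface by hand, you could send it as a system message. The sketch below only prepares the role/content message list used by OpenAI's Chat Completions API (the actual API call is left out) and extracts the `[User Action]` steps from a reply. `build_messages`, `user_actions`, and the shortened `SYSTEM_PROMPT` are hypothetical.

```python
from typing import Dict, List

# Abbreviated; in practice, paste the full system prompt shown above
SYSTEM_PROMPT = (
    "You are a helpful assistant. ... When the user needs to take an action, "
    'you begin the bullet point with "[User Action]: ...".'
)


def build_messages(history: List[Dict[str, str]], user_input: str) -> List[Dict[str, str]]:
    """Return a chat-style message list: system prompt, prior turns, new input."""
    return (
        [{"role": "system", "content": SYSTEM_PROMPT}]
        + history
        + [{"role": "user", "content": user_input}]
    )


def user_actions(reply: str) -> List[str]:
    """Collect the steps the model has delegated to the user."""
    marker = "[User Action]:"
    actions = []
    for line in reply.splitlines():
        # Drop list numbering such as "1. " before checking for the marker
        text = line.strip().lstrip("0123456789.").strip()
        if text.startswith(marker):
            actions.append(text[len(marker):].strip())
    return actions


reply = """1. [User Action]: Search for information about the first successful heart transplant
2. User must provide search result
3. Look up the answer to the question
4. Return the answer"""

print(user_actions(reply))
# ['Search for information about the first successful heart transplant']
```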

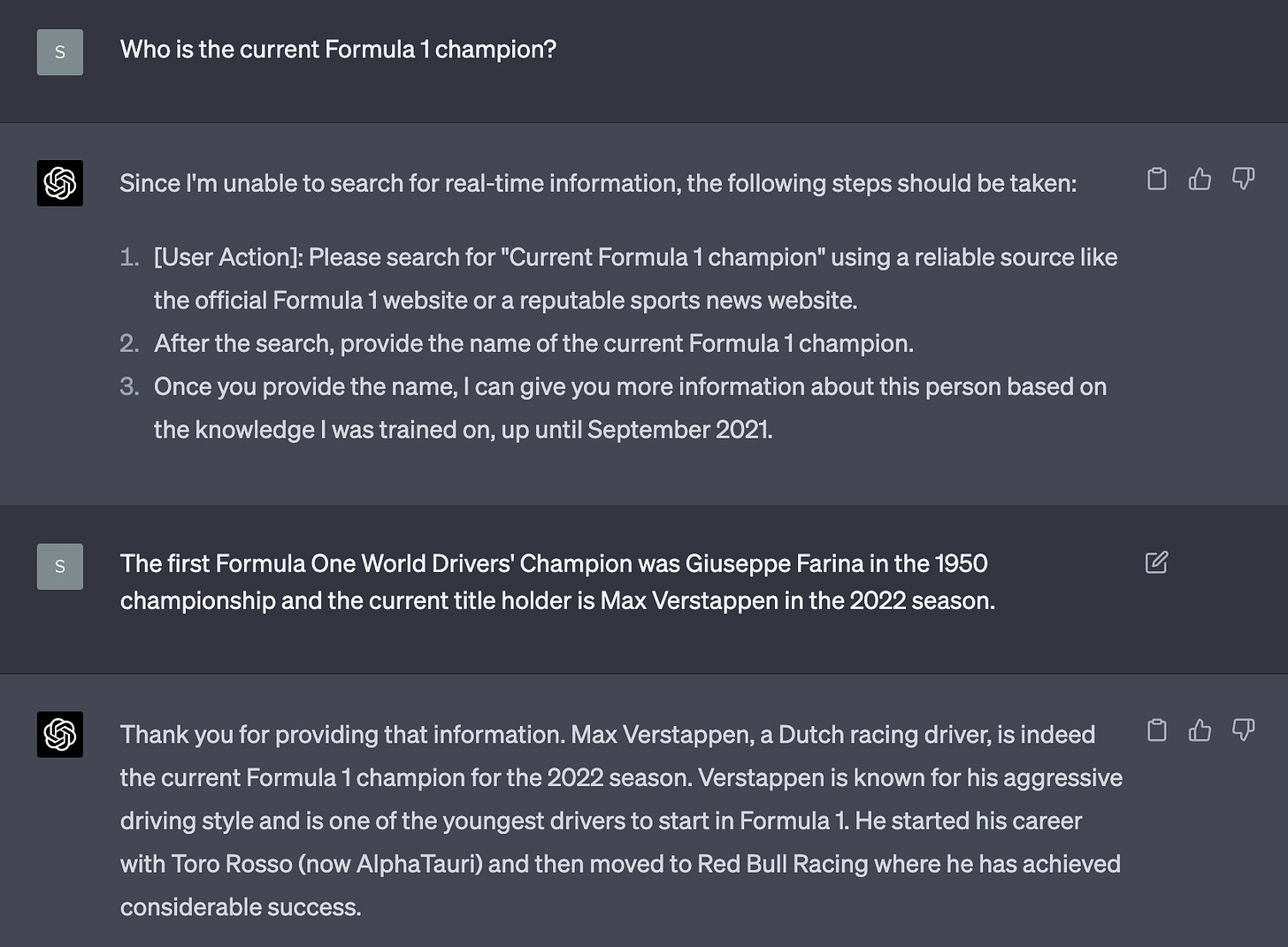

And here you can see the result of using this prompt:

However, this is not a real improvement: the user still has to run every search, so in most cases it is more work to use the AI than to do the task ourselves.

But, as the ReAct paper demonstrated, AI systems can use the Wikipedia API themselves. The same approach works with other tools, such as Google search, and even lets a model write SQL code to get data from a database.
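To show what such a tool might look like, here is a minimal SQL tool built on Python's built-in `sqlite3` module. It takes a query string (which, in a ReAct loop, the model would write) and returns either the rows or the error message as observation text, so the model can see what went wrong and correct its query. `make_sql_tool` and the small `champions` table are hypothetical.

```python
import sqlite3


def make_sql_tool(conn: sqlite3.Connection):
    """Wrap a database connection as a tool: SQL string in, observation text out."""

    def run_sql(query: str) -> str:
        try:
            rows = conn.execute(query).fetchall()
        except sqlite3.Error as exc:
            # Return the error as an observation so the model can fix its query
            return f"SQL error: {exc}"
        return repr(rows)

    return run_sql


# Small example table
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE champions (year INTEGER, driver TEXT)")
conn.executemany(
    "INSERT INTO champions VALUES (?, ?)",
    [(2021, "Max Verstappen"), (2022, "Max Verstappen")],
)

run_sql = make_sql_tool(conn)
# In a ReAct loop, this query string would come from the model's action
print(run_sql("SELECT driver FROM champions ORDER BY year DESC LIMIT 1"))
# [('Max Verstappen',)]
print(run_sql("SELECT * FROM drivers"))
# SQL error: no such table: drivers
```

Because the model writes the SQL, a real deployment should give this tool a read-only connection.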

How ReAct and LangChain can turn LLMs into Autonomous Systems

LangChain is a framework for developing applications powered by language models. According to their website, LangChain makes it easy for developers to build AI systems that are:

- Data-Aware: connect a language model to other sources of data

- Agentic: allow a language model to interact with its environment

LangChain uses the ReAct approach to create agents, which are AI systems that can interact with tools.

A tool could, for example, run a Google search or connect to a SQL database. An agent uses an LLM to decide which actions to take and in what order.
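The split between tools and agent can be illustrated with a small registry. This is not LangChain's implementation. The hypothetical `Tool` dataclass only mirrors the idea of a tool as a name, a description, and a function, and `dispatch` executes whichever tool and input the model chose. The two toy tools stand in for Google search or a SQL database, so the sketch runs without API keys.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Tool:
    name: str          # what the model writes after "Action:"
    description: str   # tells the model when the tool is useful
    func: Callable[[str], str]


def dispatch(tools: List[Tool], action: str, action_input: str) -> str:
    """Run the tool the model chose and return the observation text."""
    by_name: Dict[str, Tool] = {tool.name: tool for tool in tools}
    if action not in by_name:
        # Report an invalid choice back to the model instead of crashing
        return f"{action} is not a valid tool, try one of {sorted(by_name)}."
    return by_name[action].func(action_input)


# Hypothetical toy tools
tools = [
    Tool("Calculator", "Adds integers written like '2+3'.",
         lambda expr: str(sum(int(part) for part in expr.split("+")))),
    Tool("Echo", "Repeats its input. Useful for testing.", lambda text: text),
]

print(dispatch(tools, "Calculator", "2+3"))  # 5
print(dispatch(tools, "Search", "F1 champion"))
# Search is not a valid tool, try one of ['Calculator', 'Echo'].
```

Deciding *which* action to take, and in what order, stays with the LLM; the code only carries out that decision.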

Let's see how we can use LangChain to build a system that can answer our questions on its own using the Wikipedia tool.

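```python
# Uses the LangChain API from the time of writing (0.0.x releases); newer
# releases have deprecated or moved some of these imports.
# Requires: pip install langchain openai wikipedia
import os

from langchain.agents import AgentType, initialize_agent, load_tools
from langchain.llms import OpenAI

# Replace with your own key (or export OPENAI_API_KEY in your shell instead)
os.environ["OPENAI_API_KEY"] = "your-api-key"

# Load the OpenAI model; temperature=0 keeps the output close to deterministic
llm = OpenAI(temperature=0)

# Next, let's load some tools to use (the Wikipedia tool needs the `wikipedia` package)
tools = load_tools(["wikipedia"], llm=llm)

# Now we can initialize a zero-shot ReAct agent that picks tools by their descriptions
agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,  # print the reasoning trace (Thought/Action/Observation)
)

agent.run("Who is the current formula 1 champion?")
```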

And here is the result:

```
> Entering new AgentExecutor chain...
I need to find out who won the most recent Formula 1 championship
Action: Wikipedia
Action Input: Formula 1 championship
Observation:
Page: 2023 Formula One World Championship
Summary: The 2023 FIA Formula One World Championship is a motor racing championship for Formula One cars, which is the 74th running of the Formula One World Championship. It is recognised by the Fédération Internationale de l'Automobile (FIA), the governing body of international motorsport, as the highest class of competition for open-wheel racing cars. The championship is contested over a record twenty-three Grands Prix to be held around the world, began in March and will end in November.Drivers and teams compete for the titles of World Drivers' Champion and World Constructors' Champion, respectively. Max Verstappen, driving for Red Bull Racing-Honda RBPT, is the reigning Drivers' Champion, while his team is the reigning Constructors' Champion.
Thought: I now know the final answer
Final Answer: Max Verstappen is the current Formula 1 champion, having won the 2023 FIA Formula One World Championship.

> Finished chain.

Max Verstappen is the current Formula 1 champion, having won the 2023 FIA Formula One World Championship.
```

As you can see in the output, the agent again uses a reasoning trace to reach its answer. Its first thought was, "I need to find out who won the most recent Formula 1 championship". It then correctly uses the Wikipedia tool to search for the answer.

It could also have used its internal knowledge to answer the question, but it chose not to do so. We will explore later why this is a good thing. After it received the response, its second thought was that it now knew the final answer, because the answer was part of the response. The name is correct, but the justification is not: the observation describes the 2023 season as still in progress and calls Verstappen the reigning champion, so at that point his title came from 2022, not 2023.
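Behind the scenes, the agent has to turn each completion, like the ones in the log above, into either a tool call or a final answer. Here is a simplified sketch of that parsing step. It assumes the `Action:`, `Action Input:`, and `Final Answer:` labels visible in the log. LangChain's own output parser handles more edge cases, and `parse_step` is a hypothetical name.

```python
import re
from typing import Optional, Tuple

# "Action: <tool>" followed on the next line by "Action Input: <input>"
ACTION_RE = re.compile(r"Action:\s*(.*?)\s*\nAction Input:\s*(.*)")


def parse_step(text: str) -> Tuple[str, str, Optional[str]]:
    """Classify one completion as a final answer or a tool call.

    Returns ("final", answer, None) or ("action", tool_name, tool_input).
    """
    if "Final Answer:" in text:
        return "final", text.split("Final Answer:")[-1].strip(), None
    match = ACTION_RE.search(text)
    if match is None:
        raise ValueError(f"Could not parse model output: {text!r}")
    return "action", match.group(1).strip(), match.group(2).strip()


first = (
    "I need to find out who won the most recent Formula 1 championship\n"
    "Action: Wikipedia\n"
    "Action Input: Formula 1 championship"
)
print(parse_step(first))
# ('action', 'Wikipedia', 'Formula 1 championship')
print(parse_step("I now know the final answer\nFinal Answer: Max Verstappen"))
# ('final', 'Max Verstappen', None)
```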

Prompts are the key to steering Large Language Models

It's impressive to see how AI models can think, reason and use tools like the Wikipedia API to solve tasks. But how does this work under the hood?

When you look at the source code of LangChain agents and tools, you can see that it all comes down to clever prompting. Every tool has a description, and the prompt sent to the language model includes the sentence "You have access to the following tools:" followed by these descriptions.

The prompt also includes instructions on how the model should structure its output as a sequence of thoughts, actions, and observations. These instructions come from the ZERO_SHOT_REACT_DESCRIPTION agent type we selected.
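The sketch below assembles a prompt of this kind from tool descriptions. The wording is modeled on LangChain's zero-shot ReAct prompt as of this writing, but it is an approximation rather than a verbatim copy, so check the LangChain source for the exact text. `build_prompt` and the Wikipedia description are hypothetical.

```python
from typing import List, Tuple

# Approximation of a zero-shot ReAct prompt; not LangChain's exact wording
PREFIX = ("Answer the following questions as best you can. "
          "You have access to the following tools:")
FORMAT_INSTRUCTIONS = """Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question"""
SUFFIX = "Begin!\n\nQuestion: {question}\nThought:"


def build_prompt(tools: List[Tuple[str, str]], question: str) -> str:
    """Combine tool descriptions, format instructions and the question."""
    tool_lines = "\n".join(f"{name}: {description}" for name, description in tools)
    tool_names = ", ".join(name for name, _ in tools)
    return "\n\n".join([
        PREFIX,
        tool_lines,
        FORMAT_INSTRUCTIONS.format(tool_names=tool_names),
        SUFFIX.format(question=question),
    ])


print(build_prompt(
    [("Wikipedia", "Look up a topic on Wikipedia.")],  # hypothetical description
    "Who is the current formula 1 champion?",
))
```

Because the prompt ends with `Thought:`, the model begins its completion with a thought, just as the agent's first output line in the log above is its reasoning. A real agent also has to stop generation before the model writes its own `Observation:`, so the tool's actual result is inserted instead. That step is not shown here.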

That's it, there's nothing more to it. We did not change the underlying model, use fancy tricks, or fine-tune anything. We designed a clever system and used clever prompts.

Using the right prompts to let AI models, such as GPT-3, do things they couldn't do with "normal" prompts is also nothing new.

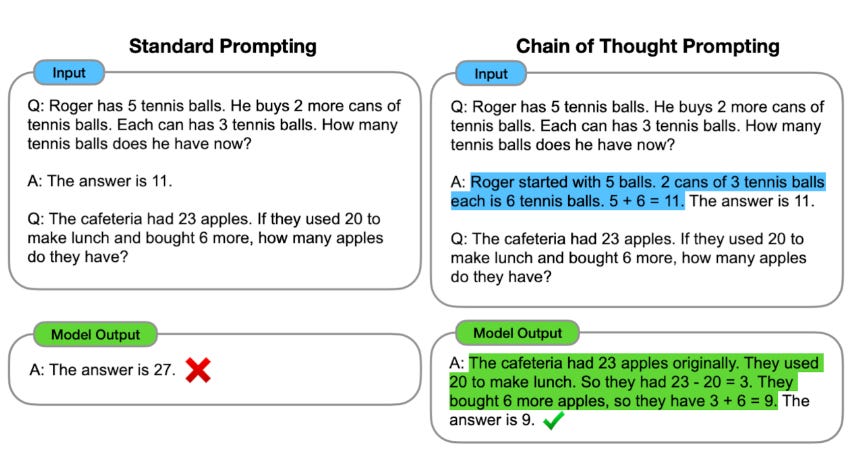

The chain of thought paper showed that generating a chain of thought (a series of intermediate reasoning steps) improves the ability of large language models to perform complex reasoning.

Example of Chain-Of-Thought Prompting

As the screenshot above illustrates, once the model is shown how to "think aloud", it can solve the task.
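A few-shot chain-of-thought prompt is just a worked example, with its reasoning written out, placed in front of the new question. The sketch below uses the tennis-ball exemplar from the chain-of-thought paper. The model call itself is omitted, and `completion` is a hypothetical reply, used only to show how the final answer can be pulled out of the reasoning.

```python
import re
from typing import Optional

# One worked exemplar (the tennis-ball example from the chain-of-thought paper):
# the reasoning steps are written out before the answer
EXEMPLAR = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
    "A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11."
)


def cot_prompt(question: str) -> str:
    """Put the exemplar before the new question so the model imitates its reasoning."""
    return f"{EXEMPLAR}\n\nQ: {question}\nA:"


def extract_answer(completion: str) -> Optional[str]:
    """Return N from the last 'The answer is N' in a completion, if present."""
    matches = re.findall(r"The answer is (-?\d+)", completion)
    return matches[-1] if matches else None


prompt = cot_prompt(
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, "
    "how many apples do they have?"
)
# Hypothetical model reply in the same step-by-step style
completion = (
    "The cafeteria had 23 apples originally. They used 20 to make lunch. "
    "So they had 23 - 20 = 3. They bought 6 more apples, so they have 3 + 6 = 9. "
    "The answer is 9."
)
print(extract_answer(completion))  # 9
```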

The cool thing about the ReAct paper and LangChain is that they let us use these techniques. Most importantly, though, they let our language models use tools to solve even more complicated tasks, and with just a few lines of code.

But that's not all. We know that language models tend to make up facts, or "hallucinate". Does the ReAct approach help solve the issue of hallucination?

Enhancing Interpretability and Trustworthiness of Large Language Models

A big issue with OpenAI's GPT models is that their training data is not up to date: at the time of writing, it only extends to September 2021. Letting the models retrieve accurate, up-to-date information, for example through the Wikipedia API, makes their responses more trustworthy.

Also, because ReAct builds on chain-of-thought reasoning, it lets us humans see how the AI arrived at its answer. This improves the model's interpretability and our ability to diagnose its errors: with ReAct, you can follow how the model solves a task and spot any problems that arise.
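Because the trace is plain text, part of this inspection can even be automated. The following is a deliberately crude heuristic, not part of ReAct or LangChain. It flags a final answer whose text does not appear in any observation the model received. A flag does not prove a hallucination; it only marks the trace for a human to review. `unsupported_answer` is a hypothetical helper.

```python
from typing import List


def unsupported_answer(trace: List[str], answer: str) -> bool:
    """Return True if the answer text appears in no observation of the trace."""
    observations = [line for line in trace if line.startswith("Observation")]
    # Case-insensitive substring match: simple, and easy to fool
    return not any(answer.lower() in obs.lower() for obs in observations)


trace = [
    "Question: When was the first successful heart transplant performed?",
    "Thought 1: I need to search for information about heart transplants",
    'Action 1: search "first successful heart transplant"',
    "Observation 1: On December 3, 1967, Barnard transplanted a heart ...",
]
print(unsupported_answer(trace, "December 3, 1967"))  # False: backed by Observation 1
print(unsupported_answer(trace, "December 3, 1968"))  # True: flag for review
```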

Conclusion

Teaching Large Language Models (LLMs) how to think and act is a big step forward that has already led to some very interesting ideas. Two examples are prototypes of autonomous AI systems: Auto-GPT and babyagi.

These systems have a lot of potential to make operations more efficient and improve productivity. For example, they can create business reports or write content based on data from the internet.

The ReAct approach is one way to design and build such systems, and you now know how to use it in your next project.

Sources: